Views on the impacts of our proposals

Across the five calls for evidence, we asked respondents answering on behalf of organisations about the impact of our proposals. We asked them for information on the likely costs and benefits of our proposals and on whether, if at all, these would affect organisations’ ability to offer services to the UK market.

Some respondents’ comments concerned issues arising from the law itself, rather than from the regulatory approach we proposed in the calls for evidence.

When we asked respondents whether our regulatory position would result in additional impacts:

- 15 respondents thought the proposals would result in benefits for their organisation;

- nine respondents thought the proposals would result in costs or burdens to their organisation;

- 35 respondents thought there would be costs and benefits;

- 13 respondents thought there would be neither; and

- 16 respondents were unsure.

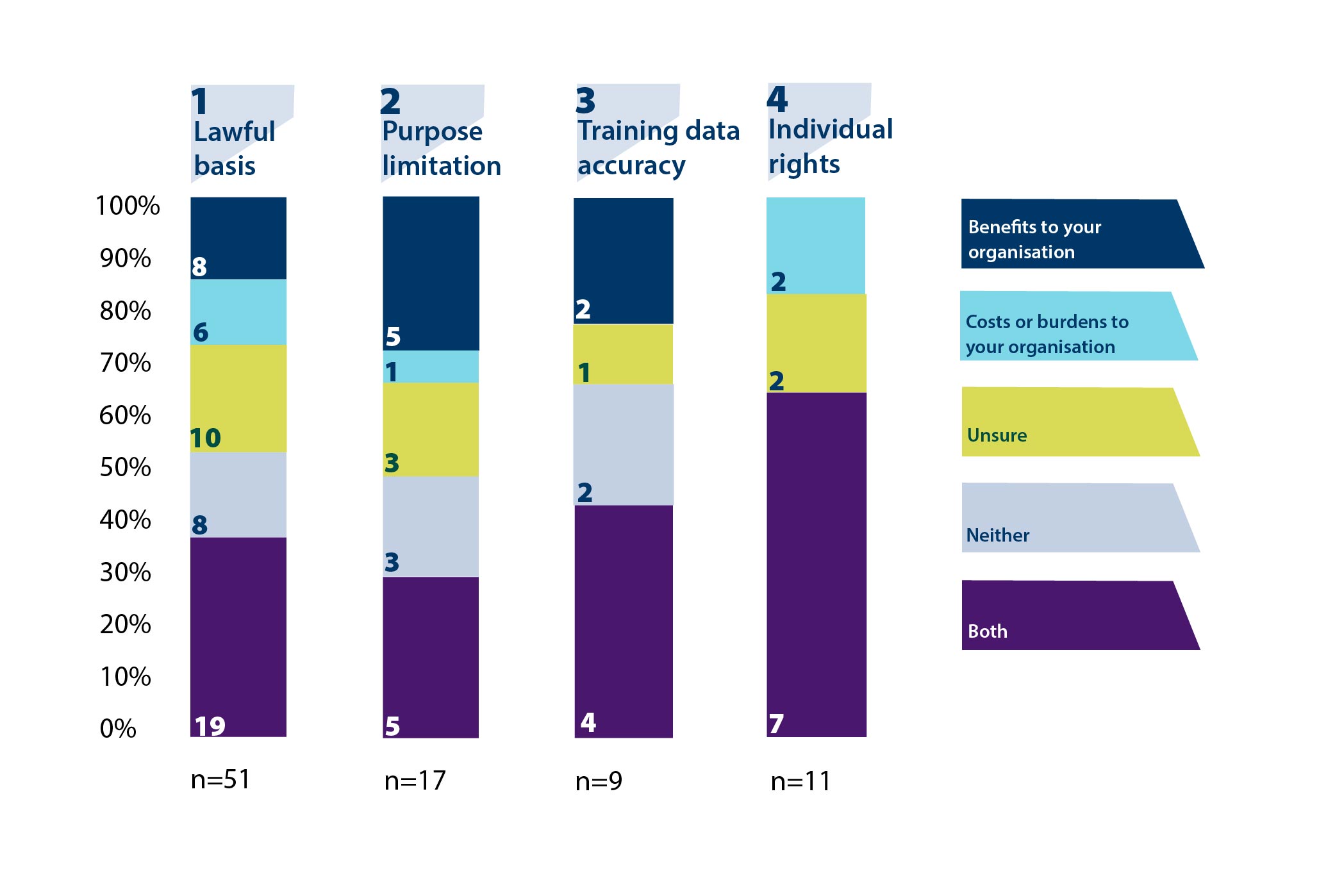

Figure 2, below, shows the breakdown across the calls for evidence. Please note that sample sizes varied throughout according to the number of respondents that provided a response to each question.60

Figure 2: Do you think the proposed regulatory approach will result in benefits or costs for your organisation?

Source: ICO analysis.

Note: Controllership is not included in Figure 2 as the framing of impact-related questions differed for the controllership call for evidence. Refer to section 4.1.5 for discussion of impact responses on controllership.

Across the five calls for evidence, respondents’ overall sentiment on whether our approach would result in additional costs or benefits was inconclusive. As shown in Figure 2, the largest group of respondents who answered the impact questions (35, 39%) said that our regulatory approach would result in both costs and benefits for their organisation. However, for our positions on lawful basis, purpose limitation and accuracy of training data, the net anticipated impact was positive when those who were unsure are excluded.

Table 1 highlights the main impacts of the proposals that respondents identified across the calls for evidence. We asked respondents to select, from a multiple-choice list, the impacts they expected our proposals to have.⁶¹ Few respondents gave more detail on anticipated costs or benefits than is set out in Table 1.

The main benefits respondents identified were improved:

- regulatory certainty for their organisation (39 respondents); and

- public trust in the adoption of generative AI models (30 respondents).

The majority of respondents that identified costs highlighted that the proposals would result in increased:

- time costs of understanding and implementing the regulatory approach (20 respondents);

- costs associated with making changes to organisations’ business models (10 respondents); and

- resource costs associated with people exercising their information rights (seven respondents).

Only three respondents commented on how the proposals would affect their ability to offer services to the UK market. Two of these responded to the lawful basis call for evidence, highlighting that:

“We consider it inappropriate to require the developer to impose downstream controls, which would have a fundamental chilling effect on any development of AI that is open source or open innovation in the UK.”

(The need to detail specific processing purposes at each stage of the AI lifecycle may) “inhibit firms’ ability to develop and deploy Gen-AI in practice”.

Similarly, one respondent to the controllership call for evidence commented that:

“if the understanding of when a developer/provider of AI is to be considered as (joint) controller was to be broadened, this may significantly increase the obligations to comply with data protection law and constitute an additional obstacle to making AI services available on the market.”

Given the small sample size and the limited representativeness of respondents, it is difficult to infer from this feedback what the proposals mean for the market as a whole.

Table 1: Summary of impact responses⁶²

| | Impacts | 1: Lawful basis | 2: Purpose limitation | 3: Accuracy of training data | 4: Individual rights | Total |
|---|---|---|---|---|---|---|
| Benefits | Providing regulatory certainty to your organisation | 16 | 10 | 5 | 8 | 39 |
| | Improved public confidence in the adoption of generative AI models | 12 | 9 | 4 | 5 | 30 |
| | Reputational benefits from a reduced risk of data protection harms | 12 | 7 | 3 | 5 | 15 |
| | Other | 7 | 1 | 1 | 3 | 12 |
| Costs | Resource costs of defining purpose for each processing activity | n/a | 5 | n/a | n/a | 5 |
| | Resource costs of people exercising their information rights | n/a | n/a | n/a | 7 | 7 |
| | Time costs of understanding and implementing the regulatory approach | 4 | 6 | 3 | 7 | 20 |
| | Costs associated with making changes to your organisation’s business model | 3 | 2 | 2 | 3 | 10 |
| | Costs of assessing the accuracy of training data | n/a | n/a | 3 | n/a | 3 |
| | Costs of communicating the accuracy of model outputs to end-users | n/a | n/a | 2 | n/a | 2 |
| | Costs of accessing proprietary datasets for model training | n/a | n/a | 2 | n/a | 2 |
| | Other | 3 | 1 | 1 | 2 | 7 |

Source: ICO analysis. Multiple responses permitted.

Note: The impact categories that organisations were asked to identify varied across each call for evidence. Impacts which were not relevant for a specific call for evidence are marked n/a.

Respondents who selected “other” costs or benefits provided some additional details. For benefits, these included:

- a better ability to define organisational strategy;

- increased confidence around risk management; and

- reduced regulatory burden.

Some of the costs identified included:

- additional legal costs;

- reduced ability to innovate; and

- increased development costs.

We explain these in more detail in the next section.

60 Some respondents answered only certain questions, and the sample sizes reflect this.

61 The impacts that organisations were asked to identify varied across each call for evidence. Impacts which were not relevant for a specific call for evidence are not reported for that call in Table 1.

62 Controllership is not included in Table 1 as the framing of impact-related questions differed for the controllership call for evidence, limiting comparability with previous calls for evidence. Refer to section 4.1.5 for discussion of impact responses relating to controllership.