Wider information rights issues

From a privacy and information rights perspective, many of the immediately apparent issues associated with applications of quantum technologies are similar to those raised by classical technologies. But, in some cases, the unique physical characteristics of quantum information, and new capabilities, could exacerbate existing privacy and information rights issues, or introduce new issues.

Cross-cutting issues

1. Identifying personal information processing and implementing data protection by design and default

UK GDPR applies to the processing of personal information, which is information that relates to a living person who can be directly or indirectly identified from the information. Organisations that process personal information as part of a use case need to embed privacy by design and default. They also need to consider information governance and individual rights.

Determining whether or not a quantum technology use case involves processing personal information may not always be straightforward. Our Tech horizons report chapter on quantum computing highlighted that uncertainty around future use cases and the types of training data in use can be a complicating factor. Other factors include what algorithm the computer is running, and the information that algorithm needs in order to work. For uses of quantum sensing and imaging techniques, it could be challenging to identify what information the novel or increased capabilities are collecting, and how this information interacts with other data streams.

These are not challenges unique to quantum - we often provide input on such questions in our Sandbox. But, in future, the novelty and complexity of quantum concepts and technology could be an additional barrier to engaging internal teams on basic data protection questions.

Nonetheless, the data protection approach should not be any different just because a technology is ‘quantum’. Data protection obligations only come into play if the information relates to an identifiable living person.

Taking the example of quantum sensing and imaging techniques, it is not clear whether the following would collect personal information:

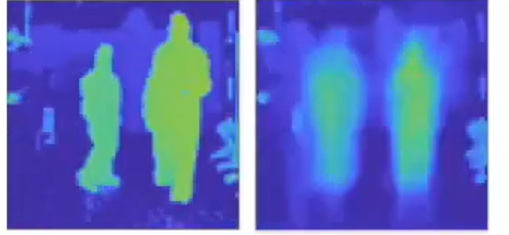

- Quantum imaging techniques that can see around corners and detect the presence of a person or object outside the line of sight can, at least at present, generate a low resolution outline of a person moving through a space, and an outline of other objects. See Image A. In this case, it is possible that with advances in machine learning techniques, the data collected could in future lead to far more detailed images. This could mean people are identifiable. Research is also exploring approaches that could, in future, detect things ‘through’ some surfaces such as bone. Information from these techniques could also be combined with other information 41.

- Quantum magnetic sensors may be able to detect the presence of certain objects through walls and people in spaces. The level of detail they can collect is unclear.

- A quantum gravity sensor can map shapes and objects over large areas. At the moment, it might be able to detect the presence of a person, but nothing more.

Detecting the presence of an unidentifiable person, without more detail, is unlikely to fall within scope of data protection legislation. But, other factors may inform the assessment of whether a person is identifiable, and therefore whether personal information is being processed. For example:

- the context;

- what other information an organisation has; and

- what other processing is taking place (eg whether information is being combined with information from other sensors on the device).

For example, if quantum imaging that can see around corners or behind walls was deployed for directed surveillance, the organisation is likely to have further information that would identify the person. This means that they would likely be processing personal information. If such a device was deployed in CCTV in a public space such as a hospital, the organisation may hold additional information about patients and staff. Therefore, capturing a low resolution outline (as opposed to a detailed image) might involve processing personal information. But this is not clear-cut.

Image A: Quantum imaging around corners or behind walls 42.

Image B: High-speed 3D Quantum imaging using single particles of light.

In other cases, identifying processing of personal information is likely to be obvious, for example:

- Using a quantum magnetic sensor for non-invasive brain scanning or quantum imaging techniques for medical diagnostics. The results of the scan or imaging will clearly relate to the person being scanned.

- Capturing an identifiable person using certain low light quantum imaging techniques. The technique generates a detailed 3D image when the information from individual light particles is stitched together using machine learning. For example, the technique shown in Image B, if used to capture a whole face, records a greater level of detail that appears more likely to involve identifiable information.

In many cases, these technologies may be embedded within a wider system, such as an autonomous vehicle or robot. What is important is that organisations deploying these technologies, or systems using these technologies, have a full understanding of:

- what information the sensor or camera can collect;

- how they are going to use the sensor or camera (and system); and

- what other information they may have or be able to access (eg information from other sensors or inferences from machine learning).

This will help them to:

- identify whether they are processing personal information;

- unpack what the potential impacts may be on people’s rights and freedoms; and

- take appropriate action.

A DPIA is a useful starting point.

Quantum sensing, timing and imaging issues

1. Risk of surveillance and data minimisation

One concern about the emerging capabilities of quantum sensing and imaging technologies is that they could open further avenues for overt or covert surveillance, or excessive information collection.

In particular, certain techniques:

- can collect information in new ways;

- can collect information with even greater degrees of precision, at further distances; and

- in future, could be deployed in very small and portable devices.

The capabilities could be:

- useful in law enforcement (eg to detect at a distance whether someone is carrying a weapon or a weapon is in a building);

- deployed to assist with emergency service recovery or for directed surveillance in national security scenarios (eg to detect objects or movement at a distance or in another building); and

- potentially used to develop certain techniques (such as 360 degree imaging) for CCTV in prisons, hospitals or the home, or to support autonomous vehicle safety.

Some of these use cases could have many benefits. Others could be highly privacy intrusive, even if not processing information about identifiable people. For example, if not deployed and managed responsibly, lawfully, fairly and transparently, such capabilities could open up previously private spaces, such as homes, to unwarranted intrusion. People may also not be aware of, or understand, what information is or is not being collected about them. This can foster mistrust or have a chilling effect on how people behave in public and private spaces. Integrating data protection by design and default provides a robust way to help address surveillance concerns.

Minimising the personal information collected and being transparent about data processing can help increase trust when implementing new technologies that could be used for surveillance.

As noted in the previous section, it is also important to be clear about:

- what new quantum sensing and imaging technologies can and cannot detect; and

- whether or not they materially add any risk to people’s rights and freedoms.

In some use cases, the limitations of a particular sensor could even mean that less identifying information is collected than classical alternatives (eg CCTV).

For example, future autonomous vehicles are likely to rely on a range of different sensors and ultra precise timing and navigation technologies to safely interact with their environment and anticipate obstacles. Next generation quantum imaging and timing technologies could be deployed to further improve these capabilities, for example by enabling a 360 degree view of the environment and obstacles around corners, in real time, even in low light, fog or very cluttered areas 43. It is still unclear whether they could also capture detailed information about a person’s route home or their neighbourhood. But autonomous vehicles are already likely to collect location and other information, whether quantum technologies are used or not. Any additional data protection risk is likely to be cumulative and also depend on an organisation’s information management practices.

2. Use for national security, intelligence and law enforcement purposes

Use of covert surveillance or other covert investigatory powers by public bodies is covered by the Regulation of Investigatory Powers Act 2000 (RIPA) and the Investigatory Powers Act 2016 (IPA). The Investigatory Powers Commissioner’s Office, not the ICO, provides independent oversight of the use of all investigatory powers by intelligence agencies, police forces and other public authorities. However, whether such activities are legal under RIPA and IPA will also influence whether any associated processing of personal information is lawful under data protection legislation.

But no matter what application or technology is used, organisations processing personal information for national security, intelligence or law enforcement related purposes still need to comply with the applicable data protection regime, whether that is UK GDPR, Part 3 of the DPA18 (law enforcement processing) or Part 4 of the DPA18 (intelligence services processing). This will also apply to relevant future uses of quantum sensing, timing and imaging technologies in these settings.

The data protection legislation provides exemptions which are applicable to specified provisions. For example, an organisation may seek to rely on a certain exemption where required to:

- safeguard national security; or

- avoid some form of harm or prejudice, such as impacting on the combat effectiveness of the UK’s armed forces 44.

But none are blanket exemptions. In each case, the processing must also still be lawful (eg it may be subject to a warrant, or Ministerial authorisation). An organisation seeking to rely on the exemption also still needs to comply with their accountability and security obligations 45. Organisations that may often rely on exemptions should also regularly review their processing to avoid expanding the scope of the processing unnecessarily (also known as “scope creep”).

Quantum computing issues

1. Accuracy, fairness and explainability

Data protection law sets out an obligation for organisations to take reasonable steps to ensure personal information they process is accurate (ie not misleading as to any matter of fact). The outputs of many machine learning systems are not facts. Instead, they are “intended to represent a statistically informed guess” about something or someone 46.

Much like many machine learning models, quantum computers are probabilistic. This means that they will give the “most likely” answer, rather than a definitive one. Calculations on current quantum computers are also prone to errors, because qubits are fragile and impacted by interference from the outside world (noise). This fragility does not introduce inaccuracies - it stops the whole computer from working. But, verifying the accuracy of quantum calculations is very complex, and technical explainability frameworks for quantum machine learning have not been developed yet.
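To illustrate what a “most likely” answer means in practice, the sketch below tallies the outcomes of repeated runs of the same computation and reports the most frequent one, together with how often it occurred. It is a minimal illustration only: the function name `most_likely_outcome` and the example measurement results are hypothetical and do not come from any real quantum computer.

```python
from collections import Counter


def most_likely_outcome(shots):
    """Return the most frequent measurement outcome and its relative frequency."""
    if not shots:
        raise ValueError("at least one measurement is required")
    counts = Counter(shots)
    outcome, count = counts.most_common(1)[0]
    return outcome, count / len(shots)


# Hypothetical results from running the same computation eight times.
example_shots = ["101", "101", "001", "101", "111", "101", "101", "001"]
answer, frequency = most_likely_outcome(example_shots)
print(answer, frequency)
```

The relative frequency is a rough indication of how strongly the repeated runs agree. It is not, on its own, evidence that the answer is correct.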

If an organisation makes an inference about a person using the output from a quantum computer, the inference does not need to be 100% accurate to comply with the accuracy principle. The organisation needs to record details such as:

- the fact the inference was made as a result of an analysis by a quantum computer;

- the inference is a “best guess”, not a fact; and

- relevant details about the limitations of that analysis.
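One possible way to capture the details listed above is a simple structured record. The sketch below is an assumption about how an organisation might choose to do this, not a required or recommended format. The class name `InferenceRecord`, its field names and the example values are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class InferenceRecord:
    """Hypothetical record of an inference made about a person."""
    subject_id: str            # internal reference for the person concerned
    inference: str             # what was inferred
    produced_by: str           # how the inference was generated
    is_opinion: bool = True    # a "best guess", not a fact, unless verified
    limitations: tuple = ()    # known limitations of the analysis
    recorded_on: date = field(default_factory=date.today)

    def summary(self) -> str:
        status = "best guess, not a fact" if self.is_opinion else "verified fact"
        return f"{self.inference} ({status}; source: {self.produced_by})"


# Example values are invented for illustration.
record = InferenceRecord(
    subject_id="customer-0001",
    inference="transaction flagged as possibly fraudulent",
    produced_by="analysis by a quantum computer",
    limitations=("result not independently verified",),
)
print(record.summary())
```

Keeping the “opinion” status and the limitations alongside the inference itself means that anyone relying on the record later can see that it is not a statement of fact.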

But, the accuracy of the system’s output (we call this statistical accuracy) is relevant to an organisation’s fairness obligations.

If an organisation wants to rely on an inference from a quantum computing output to make a decision about someone, they need to know that the calculation and the inference are sufficiently statistically accurate for their purposes. The calculation needs to be “sufficiently statistically accurate to ensure that any personal data generated by it is processed lawfully and fairly.” 47 Under the fairness principle, organisations should handle personal information in ways that people would reasonably expect and not use it in ways that have unjustified adverse effects on them.

At the moment, it is possible to use existing classical computing methods to test statistical accuracy and demonstrate whether quantum computing calculations are more accurate than equivalent classical models. However, it is not yet clear how to assure and demonstrate computational accuracy, especially to a non-expert, once quantum computing capabilities sufficiently outstrip those of a classical computer (for example, if it would take a classical computer decades to solve the problem) 48. This is still an active area of research.
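As a rough illustration of the kind of comparison that is possible today, the sketch below measures how often two sets of outputs match known, correctly labelled outcomes. It assumes that such labelled outcomes exist, which is exactly what may not hold once a classical computer can no longer check the result. The function name and all of the data are invented for illustration.

```python
def statistical_accuracy(predictions, labels):
    """Fraction of predictions that match the known, labelled outcomes."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and equal in length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


# Invented test data: 1 = fraudulent, 0 = not fraudulent.
labels = [1, 0, 0, 1, 0, 1, 0, 0]
classical_outputs = [1, 0, 0, 0, 0, 1, 1, 0]
quantum_outputs = [1, 0, 0, 1, 0, 1, 1, 0]

classical_accuracy = statistical_accuracy(classical_outputs, labels)
quantum_accuracy = statistical_accuracy(quantum_outputs, labels)
print(classical_accuracy, quantum_accuracy)
```

A single accuracy figure hides the difference between false positives and false negatives, so an organisation would likely need further measures before relying on a system for decisions about people.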

This could potentially pose some challenges for organisations to comply with their fairness obligations, depending on what they are using the quantum computer for. It could be relevant for specific use cases that may involve processing personal information that is likely to result in a high risk to the rights and freedoms of natural persons (high risk processing), where the accuracy of inferences cannot be confirmed without additional investigation. For example, using a quantum computer to detect fraudulent transactions in a complex dataset, or drawing inferences from genomic information. Organisations would need to record these inferences as an opinion.

In such cases, even if the dataset is accurate, there may not always be information available that could ‘prove’ a transaction was fraudulent, or that the inferences made are correct, without further investigation. But, in future, an organisation may intend to take decisions automatically on the basis of the quantum computing analysis (eg, to automatically freeze an account or refuse a credit application). In that case, maximising (and understanding) statistical accuracy becomes very important to ensure fairness.

At the moment, quantum computers are mainly used to accelerate existing machine learning algorithms. The state of the art and use cases continue to evolve and research continues into assurance and verification. Organisations must ensure they understand and document the limitations of the systems they are using. They must only use systems that are sufficiently statistically accurate for the intended purpose.

2. Transparency, storage and retention

The nature of quantum information is very different to classical information. At the moment, qubits do not last very long because their state ‘collapses’ when observed, measured, or when they are affected by the outside world. They cannot be copied and are currently fragile and prone to errors that collapse the computation.

These physical properties are not features of information in classical computing.

Following on from questions raised in the Tech horizons report, we have explored whether these physical properties of qubits may:

- have implications for an organisation’s data protection obligations; or

- make it harder to fulfil people’s rights in a quantum-enabled future.

For example, we have considered whether:

- the organisations running a quantum computer are still processing personal information if a computation collapses. If the organisation is not, it would no longer have data protection obligations over that information;

- it will be possible, in future, to store quantum information and correct any inaccurate stored quantum information about a person; and

- organisations need to (or are able to) respond to subject access requests if using a quantum computer or hybrid quantum-classical approach to process personal information, and the computation collapses.

From a technical perspective, initial engagement suggests it is still very early days to be considering these questions and they are underexplored. They are only likely to become relevant in the far longer term (10-25 years or more), if we reach the era of universal fault tolerant quantum computers. By that point, some of these questions may have been addressed by anticipated technical developments. There are several reasons for this:

- Increasing stability of qubits: If, as anticipated, future qubits are more stable and do not introduce the errors they do today 49, some of these issues are less likely to be relevant. Advances in error correction will mean that the information being processed will be spread between qubits. Error correction could be applied at each stage of computation. If there is an error in any one qubit during part of the computation, that error could be corrected without the whole computation collapsing.

- Current and future storage of quantum information: There are not yet reliable ways to store quantum information at scale, for later processing. There are theoretical approaches, but researchers need a larger quantum computer (with more qubits) to implement them. In the short term, if a quantum computer is used to process personal information, data protection principles apply when the processing is proposed and as it is being carried out. The computation may be run multiple times. But, the organisation is not storing quantum information. Therefore, questions do not yet arise about correcting stored personal information or answering subject access requests about any information “held” by a quantum computer. Any decisions an organisation makes about a person as a result of processing by a quantum computer would still be subject to data protection obligations.

- Classical information is converted into quantum information prior to processing: In order to use a quantum computer, classical information needs to be described and transformed (encoded) into a quantum state. Once the computation is complete, the result needs to be converted back into classical information, to give an answer that can be read by a classical computer. Even if a quantum computation collapses, either the controller or processor is likely to still hold and be processing classical information that they would need to disclose in response to a subject access request.
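The encoding step described in the last point above can be illustrated with one common textbook scheme, amplitude encoding. In this scheme, a list of classical numbers is rescaled so that the squares of the values sum to one, and each squared value is then the probability of reading out the corresponding outcome. This is a sketch under assumptions: the report does not say which encoding scheme would be used, the function names are hypothetical, and the example assumes real-valued input whose length is a power of two (here, two values for a single qubit).

```python
import math


def amplitude_encode(values):
    """Rescale classical values so their squares sum to 1 (amplitude encoding)."""
    norm = math.sqrt(sum(v * v for v in values))
    if norm == 0:
        raise ValueError("cannot encode an all-zero vector")
    return [v / norm for v in values]


def measurement_probabilities(amplitudes):
    """Probability of each outcome when the encoded state is measured."""
    return [a * a for a in amplitudes]


classical_values = [3.0, 4.0]   # the original classical information
amplitudes = amplitude_encode(classical_values)
probabilities = measurement_probabilities(amplitudes)
print(amplitudes, probabilities)
```

Note that `classical_values` still exists after the encoding. This mirrors the point above: the classical input, and any classical output, remain held by the organisation whatever happens to the quantum state.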

3. Blind quantum computing, privacy, and harmful processing

For the foreseeable future, people and organisations are likely to access quantum computers remotely, via the cloud. UK researchers are exploring a technique called blind quantum computing. This could add a further layer of security and privacy protection for highly sensitive information processing when using quantum computers. This could include anything from commercial secrets to processing special category data, such as health or genetic information 50.

In the long term (10-15 years), this technique would enable a person or organisation to run an algorithm, get an answer, and verify their results, without the quantum computing cloud server being able to see what they were doing. Their processing would be hidden in a way that was ‘assured’ by quantum physics 51. More specifically, qubits collapse when measured. Therefore, any attempts to copy, intercept or eavesdrop on the communication or processing on the server would collapse the quantum computation or the communication channel, so the information cannot be read 52. If the technique takes off, it could even lead to plug-in devices for people wanting to use quantum computing cloud services from home, with ‘guaranteed’ privacy 53.

Proponents have speculated that this technique could help further enhance the security and privacy of processing using a quantum computer. For example, stakeholders have speculated whether it could be a future way of securely processing sensitive personal information, such as special category data, using quantum computers in other countries, to satisfy UK GDPR rules about transferring information internationally. Whether this is the case is a question for us to consider further.

Others have highlighted that the technique may also create new challenges for counteracting attempts to use a quantum computer to enable certain types of malicious and criminal activity online. Such potential malicious use cases are extreme scenarios. However, they do raise questions about the balance to be struck between the privacy benefits of blind quantum computing, and the harms that could arise from serious misuse of blind quantum computing capabilities.

From a futures perspective, we may see current concerns about harmful uses play out in a similar way in a quantum-enabled future. For example, we could see emerging discussions around:

- ways to flag dual uses for quantum algorithms, or detect what algorithm a person is wanting to run on a quantum computer;

- proactive scanning for harmful content on a person’s or organisation’s computer (known as client-side scanning); or

- access controls or identity verification when signing up for access to a quantum computer.

We will need to further explore how this issue may develop, and what our approach might be, in a future quantum-enabled world.

Quantum communications issues

1. Securing QKD networks and access to QKD nodes

Pilots of QKD networks are exploring ways to offer future secure communications to help address the risks from a future quantum computer. Researchers are also exploring ways to integrate post-quantum cryptography into QKD networks to help develop ‘end-to-end’ encrypted communication networks 54. This is distinct from blind quantum computing above, as it involves securing information sent through the regular internet (as per chapter four), not just protecting information when accessing a quantum computer.

Our early research suggests the way these networks are set up could also lead to privacy concerns, if not appropriately managed. At the moment, quantum information (such as encryption keys) can be transmitted up to 100km away. The information is transferred between physically secured trusted nodes operated by internet service providers, until it reaches the final destination 55. It is not yet possible to transmit information at longer distances, or internationally, but efforts are ongoing to use satellites for this.

Quantum communications experts noted that a QKD network could create a secure link either:

- between two customers; or

- between the customer and internet service provider.

The type of secure link will depend on how the technology develops, how a future QKD network is set up and how it is deployed with PQC.

The first setup is similar to classical end-to-end encryption. Only the intended recipient could decrypt whatever is sent. In the second setup, a service provider could, in theory, intercept and decrypt the quantum secure communication at each “trusted node”. The second option, theoretically, provides additional points of access to communications for:

- a malicious actor; or

- law enforcement or intelligence services to prevent crime or other online harms. This would need to be authorised under the applicable legislation (RIPA or IPA).

Commentators have suggested that if this second approach is adopted, providers cannot claim to offer end-to-end post-quantum security.
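The sketch below illustrates why commentators draw this conclusion. It models a simplified trusted-node relay in which a key is passed hop by hop: on each hop it is encrypted with that link's own key, then decrypted at the receiving node. This is a simplification and an assumption about the design. The report does not specify the relay scheme, the random link keys merely stand in for keys that QKD would generate on each link, and the function names are hypothetical.

```python
import secrets


def xor_bytes(a, b):
    """Combine two equal-length byte strings with XOR (one-time pad style)."""
    return bytes(x ^ y for x, y in zip(a, b))


def relay_key(end_to_end_key, link_keys):
    """Relay a key across links; return the delivered key and what each
    intermediate node saw in the clear."""
    seen_at_nodes = []
    current = end_to_end_key
    for i, link_key in enumerate(link_keys):
        ciphertext = xor_bytes(current, link_key)   # protected while in transit
        current = xor_bytes(ciphertext, link_key)   # decrypted at the next node
        if i < len(link_keys) - 1:                  # the last hop ends at the recipient
            seen_at_nodes.append(current)
    return current, seen_at_nodes


key = secrets.token_bytes(16)
link_keys = [secrets.token_bytes(16) for _ in range(3)]  # three links, two relay nodes
delivered, seen = relay_key(key, link_keys)
print(delivered == key, all(k == key for k in seen))
```

In this model the key is protected on every link, but each intermediate node holds it in the clear. The security of the whole route therefore depends on every node being trustworthy and physically secure.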

At this stage, it is not clear which approach the UK may adopt, but we are alert to the potential privacy risks of the second approach. We are open to engaging further on this issue, should QKD networks continue to develop.

Existing regulation under RIPA, IPA, UK GDPR and PECR sets out obligations for telecoms providers to ensure the security of their services, and for agencies seeking access to communications. For example, PECR regulation 5(1A) states that telecoms providers must “ensure that personal information can only be accessed by authorised personnel for legally authorised purposes”. Ensuring that all organisations continue to comply with their data protection and PECR obligations, as well as RIPA, when establishing and running new networks will help maintain people’s trust.

Further reading

41 Nature Perspectives article on Non-line-of-sight imaging (2020); University of Glasgow blog on The nano and quantum world: Extreme light. At the moment, researchers have used the technique to see through up to 5cm of opaque material, such as bone, which they are using to explore brain imaging. Non line of sight imaging could also be combined with information such as the partial information provided by radar and wifi, which can see through certain kinds of wall.

42 QUANTIC videos on sensing and imaging for defence and security; Image A is from the video on non line of sight imaging. Image B is from the video on real time 3D imaging.

43 Nature Perspectives article on Non-line-of-sight imaging (2020); Quantum Sensing and Timing Hub article on transforming detection with quantum-enabled radar (quantumsensors.org); Transcript of House of Commons Science and Technology Committee oral evidence: Quantum technologies (2018)

44 See also: ICO guidance on law enforcement processing: National security provisions; ICO guidance on intelligence services processing: Exemptions

45 ICO guidance on intelligence services processing: Exemptions

46 ICO guidance on AI and data protection: What do we need to know about accuracy and statistical accuracy

47 ICO guidance on AI and data protection: What do we need to know about accuracy and statistical accuracy

48 Quantum Computing and Simulation Hub article on Verifiable blind quantum computing with trapped ions and single photons (2023)

49 This type of qubit is known as a logical, error corrected qubit.

50 Quantum Computing and Simulation Hub article on Verifiable blind quantum computing with trapped ions and single photons (2023). The technique involves a person sending a problem to a universal fault tolerant quantum computer via a quantum link (ie, sending information encoded on particles of light), running the problem and receiving the answer to a device attached to a computer via that same link.

51 More specifically, it provides information-theoretic security. Quantum Computing and Simulation Hub article on Verifiable blind quantum computing with trapped ions and single photons (2023)

52 Quantum Computing and Simulation Hub article on Verifiable blind quantum computing with trapped ions and single photons (2023)

53 University of Oxford media release: Breakthrough promises secure quantum computing at home (2024)